Visualizations

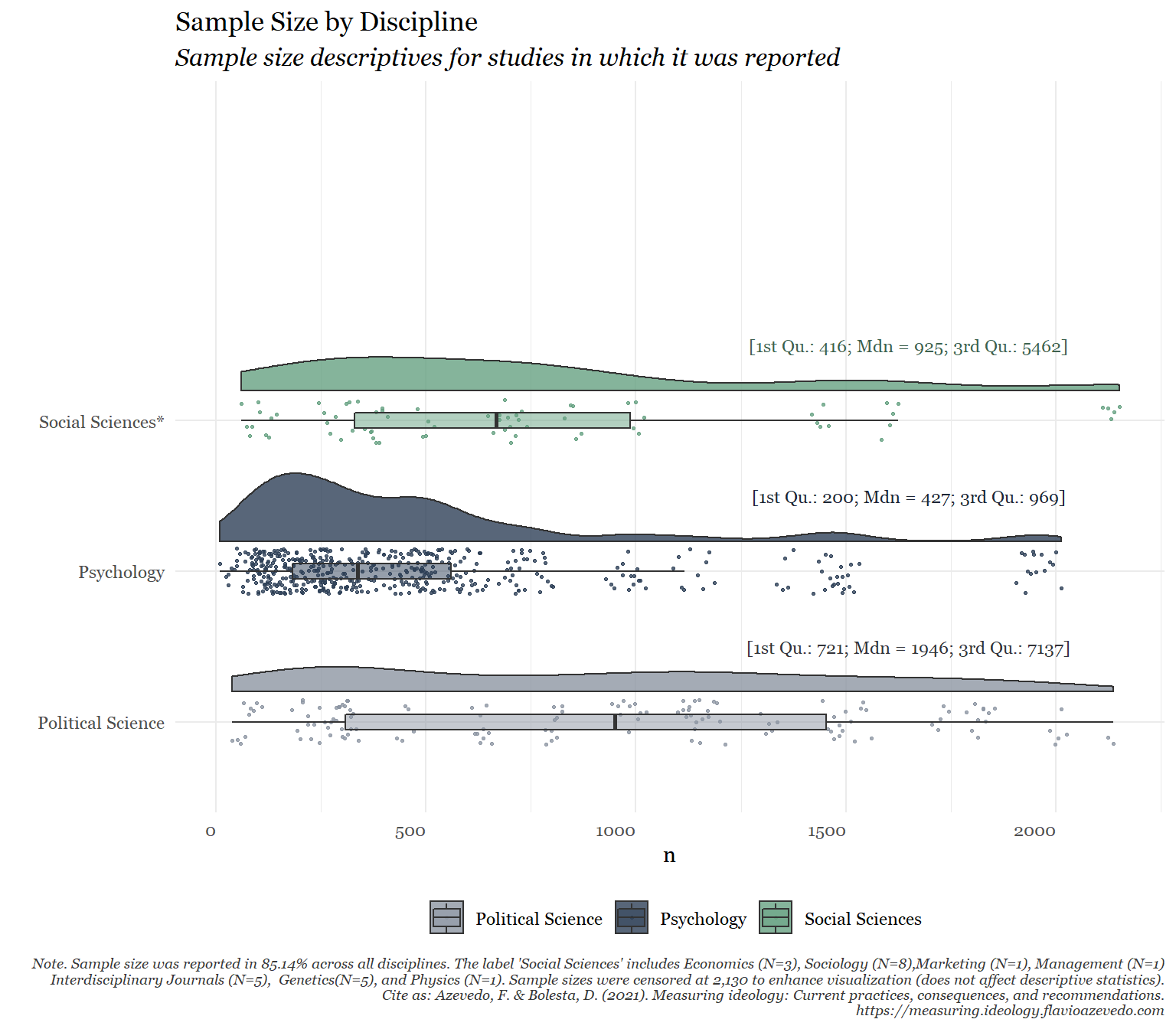

The Measurement of Ideology

These visualizations describe the results of our review of measurement practices in the study of ideology across the social sciences. We found 222 instruments, developed since 1929, that were designed to measure a person’s ideology. The number of new scales keeps growing, albeit only in WEIRD countries. We also found a high number of unique, non-validated, and on-the-fly measures. Most of the instruments we found also failed to report item text, the techniques applied, and psychometric properties. Altogether, there is evidence of low replicability and generalizability. In sum, the long-standing measurement practices in the scientific study of ideology are antithetical to the goals of theory development and knowledge accumulation. These visualizations were made in collaboration with Deliah Bolesta.

Multiverse Analysis

The plot summarizes the results of thousands of regressions, showing which predictors of distrust of climate science survive most often when competing for explained variance. As indicated in Panel C, Ideology (operational and symbolic) and Social Dominance Orientation are the most predictive. The plot also shows that ideological scales seem to perform differently, even when they are measures of the same construct.

Demographics of Support for Black Lives Matter

The plot summarizes the percentage of support for the Black Lives Matter (BLM) movement across societal groups and subgroups (Age, Education, Employment, Gender, Ideology, Income, Marital Status, Party ID, Race/Ethnicity, Religious Affiliation, and Urbanicity) based on 17 probability-based, nationally representative datasets (pooled N = 31,779). We also identified 37 predictors and conducted an individual meta-analysis for each to estimate the strength and robustness of its association with BLM support. Our results suggest that there is a near-perfect match between BLM opposition and positive attitudes towards American institutions deeply rooted in systemic racism. Lastly, we examined which predictors of BLM support were most robust and found that symbolic ideology, race/ethnicity, gender, and Party ID (in that order) were consistently associated with BLM support. The present work contributes to a broad categorization of correlates of BLM support across social, psychological, and political domains.

Multiple Meta-analyses

The plot summarizes the results of 30 meta-analyses from 43 publications (N = 207,360) on the associations between the Big Five personality traits and individual differences in political trust and involvement in politics. The meta-analyses revealed substantial correlations between involvement and openness (+), extraversion (+), and neuroticism (−), but only small correlations between trust and the Big Five.

Check out the full paper, filled with extra goodies including two pre-registered studies (N2 = 988 and N3 = 795) indicating that political involvement is more strongly linked to the Big Five than political trust is.

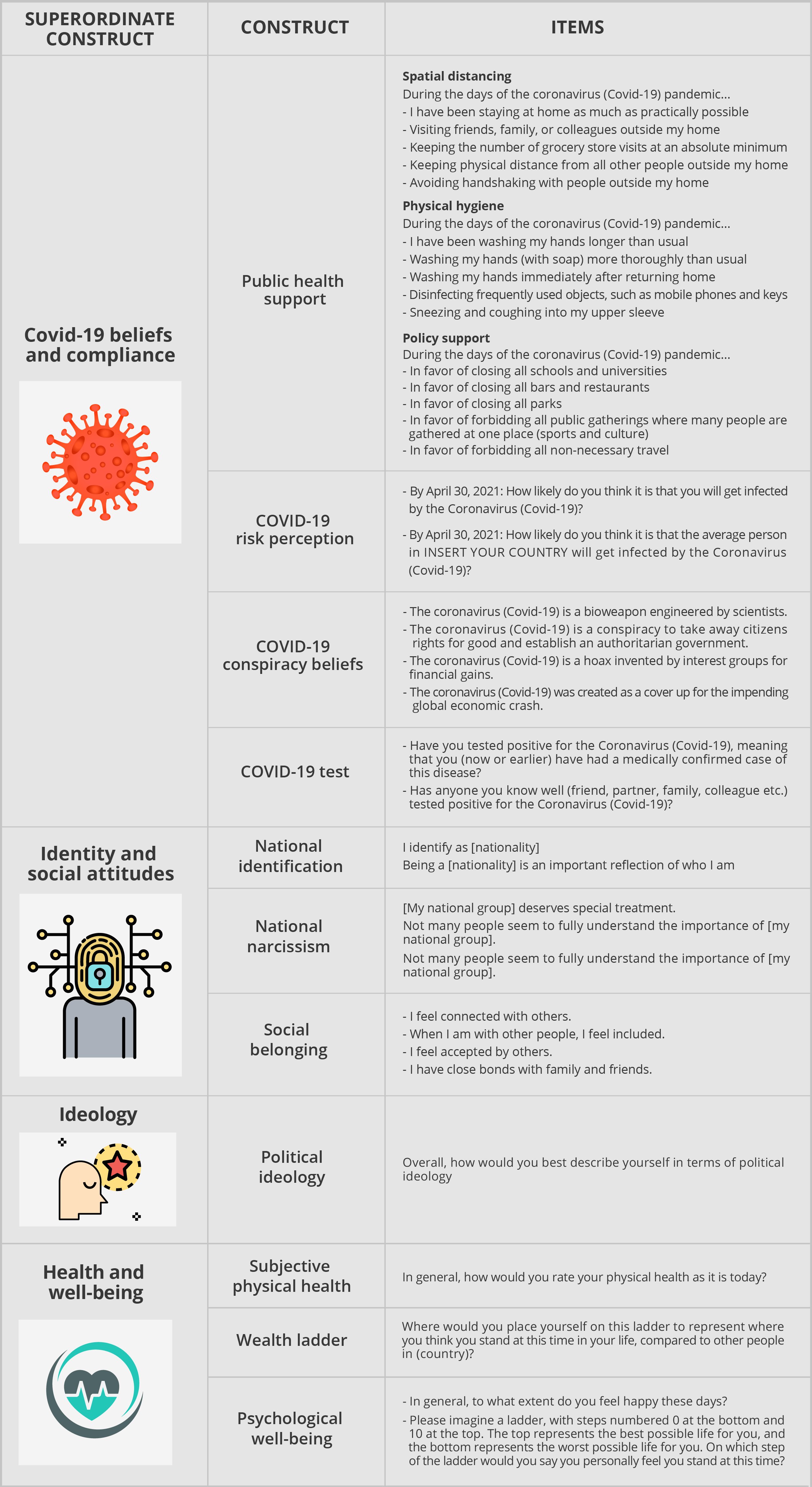

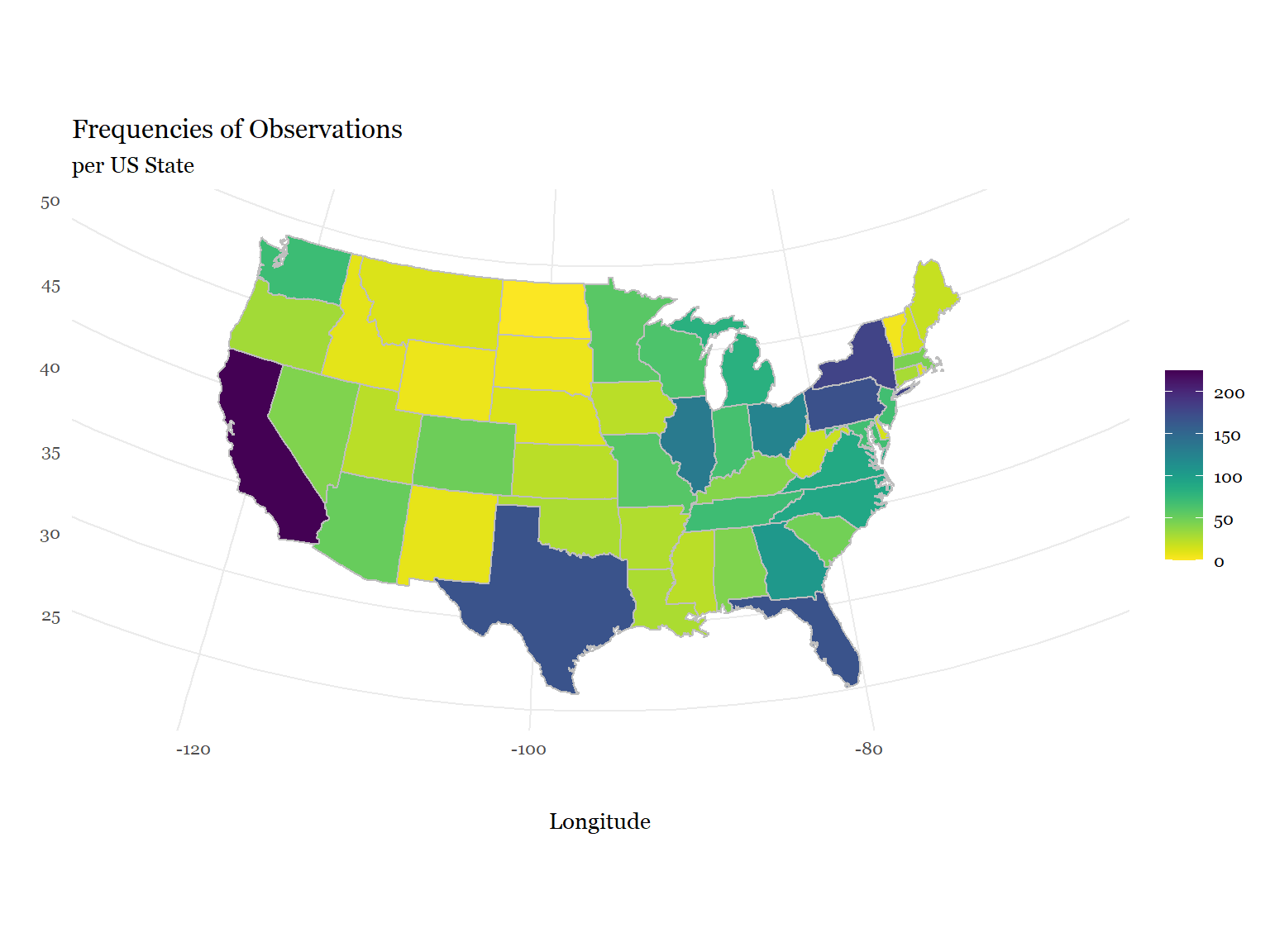

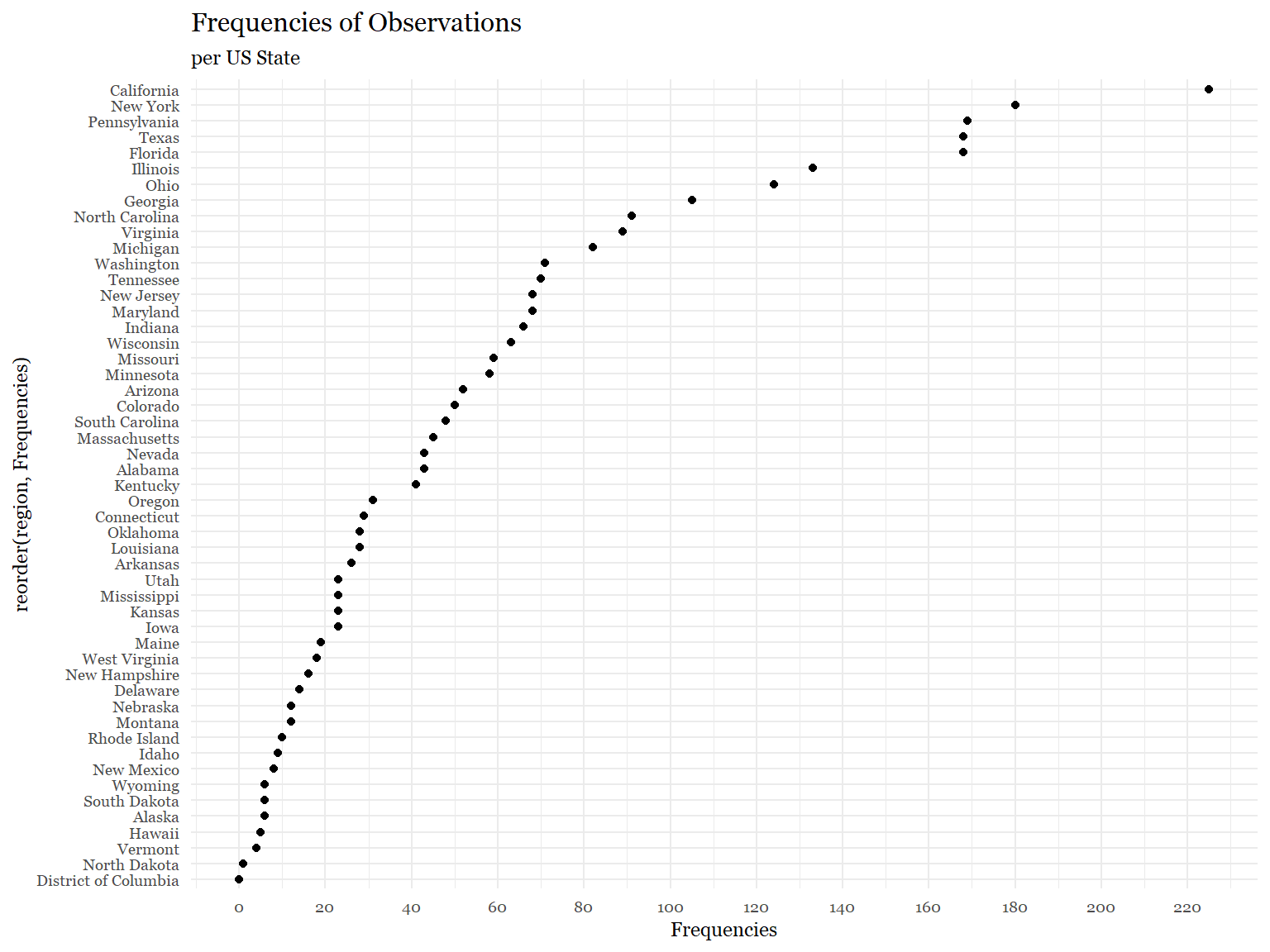

An International Collaboration on the Social & Moral Psychology of COVID-19

The accompanying visualizations describe the data that over 250 researchers collected from over 50,000 citizens in 69 countries. They display the characteristics of the data, the measured constructs, and data quality. These plots will appear in our paper presenting the data, which is currently being drafted. The goal of the International Collaboration on the Social & Moral Psychology of COVID-19 is to bring together scholars from around the globe to examine psychological factors underlying attitudes and behavioral intentions related to COVID-19. We focused on factors such as beliefs in conspiracy theories, cooperation, risk perception, social belonging, intellectual humility, national identification, collective narcissism, moral identity, political ideology, self-esteem, and cognitive reflection. The goal was to generate a massive multinational collection of representative samples that can serve as a public good for the scientific community. We are operating under a model of open science where all materials and data are open.

Check out the full paper (Open Access) and our Shiny App. We published our main paper in Nature Communications (here’s a summary of the findings). Learn more about the project.

PPBS | The Psychology of Political Behavior Studies

The Psychology of Political Behavior Studies (PPBS) are an ongoing series of quota-based national cross-sections and their replication analogs, containing a set of repeating core questions on social and political attitudes, values, voting, candidate preferences, and political participation. PPBS leverages 12 datasets, collected through professional survey companies, for a combined N of 21,107 interviews. PPBS includes four nationally representative cross-sections of the American population (N = 7,259) collected during the last three US elections (i.e., the 2016 and 2020 presidential elections and the 2018 midterms), their replication/confirmatory analogs (N = 11,582) in pre- and post-election studies, and survey-specific repeated-measures recontacts (N = 2,215) across three time points, allowing for both within- and between-subjects research designs.

Funds for PPBS 2022 have been acquired! 🎉🥳🎊

Taken together, PPBS has surveyed more than 60 political and psychological constructs, many of which are measured with their full instruments, allowing for the testing of competing explanations of the psychology of political behavior.

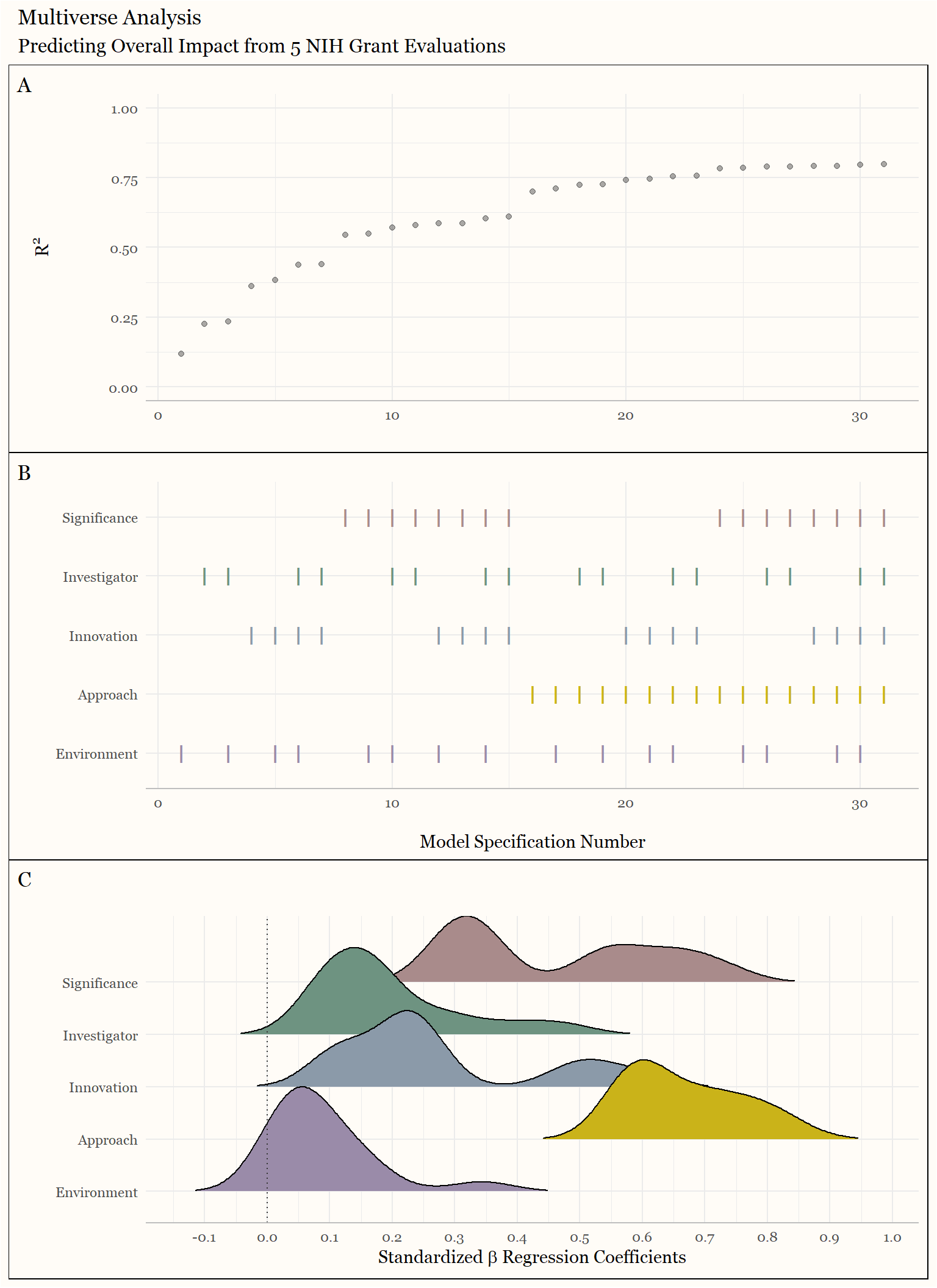

Understanding NIH Grant Evaluations

The National Institutes of Health uses small groups of scientists to judge the quality of the grant proposals that it receives, and these quality judgments form the basis of its funding decisions. For this system to fund the best science, the subject experts must, at a minimum, agree on what counts as a “quality” proposal. We investigated the degree of agreement by leveraging data from a recent experiment with 412 scientist reviewers, each of whom reviewed 3 proposals, and 48 NIH R01 proposals (half funded and half unfunded), each of which was reviewed by between 21 and 30 reviewers. Across all dimensions of NIH’s official rubric, we find low agreement among reviewers in their judgments of scientific merit. For judgments of Overall Impact, which has the greatest weight in funding decisions, we estimate that three reviewers yield a reliability of .2, and that 12 reviewers would be required to bring this reliability up to .5. Supplemental analyses found that reviewers are even less reliable in the language they use to describe proposals.
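To give a feel for how the two reliability figures relate, here is a minimal sketch that assumes the standard Spearman-Brown prophecy formula links panel size to the reliability of the averaged score. The text above does not say which estimator produced the figures, so the formula choice and the function names are illustrative assumptions, not the study's actual procedure.

```python
def single_rater_reliability(panel_reliability: float, n_raters: int) -> float:
    """Invert Spearman-Brown: reliability of one rater implied by the
    reliability of the mean score of n_raters raters (assumed model)."""
    return panel_reliability / (n_raters - (n_raters - 1) * panel_reliability)


def reliability_of_mean(single: float, n_raters: int) -> float:
    """Spearman-Brown: reliability of the mean score across n_raters raters."""
    return n_raters * single / (1 + (n_raters - 1) * single)


def raters_needed(single: float, target: float) -> float:
    """Panel size required for the mean score to reach the target reliability."""
    return target * (1 - single) / (single * (1 - target))


# Figures reported above: three reviewers yield a reliability of .2.
r1 = single_rater_reliability(0.2, 3)

print(f"implied single-reviewer reliability: {r1:.3f}")
print(f"reliability with 12 reviewers: {reliability_of_mean(r1, 12):.2f}")
print(f"reviewers needed for .5: {raters_needed(r1, 0.5):.1f}")
```

Under this assumption, a three-reviewer reliability of .2 implies a single-reviewer reliability of roughly .08, and scaling the panel to 12 reviewers gives .5, which matches the figures reported above.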